If you’ve bought a television, played a video game, used a streaming service, or watched a Blu-ray anytime in the past several years, you’ve likely seen the terms HD and HDR.

You probably know that these things are superior to the SD quality standard of the past, but your understanding of HD and HDR might stop there. That’s okay—these two terms are a little confusing because of how close in name they are. Thankfully, there’s a clear and concise way to outline the differences between the two.

We’ll begin by outlining the key differences between HD and HDR, then place their respective specs side-by-side to better illustrate what makes them distinct. From there, we’ll provide an overview of their histories to help convey how they both came to be.

After that, we can weigh the pros and cons of HD and HDR and determine which of the two matters more for your television today. In the end, both sides of the HD vs. HDR debate will be made clear.

Let’s get started!

HD vs. HDR: Key Differences

HD and HDR are similar in the sense that both terms pertain to television quality standards, but they are quite different in the way they actually relate to TV quality overall.

Let’s take a look at these key differences and outline them clearly below.

Origin

Before anything else, let’s take a look at the origin of HD and HDR.

The term HD emerged in the late 1930s to describe early electronic scanning formats, long before display technologies shifted from analog to digital. With that shift, HD was reborn alongside SD as a way to measure the number of pixels on a television screen.

HDR, on the other hand, did not emerge as a television standard until 2014, when Dolby unveiled Dolby Vision, a technology designed to increase the perceived quality of HD displays. In that sense, HDR would not have originated without HD: you couldn’t have HDR without first having HD.

Function

Perhaps the greatest difference between HD and HDR is function. Essentially, how do these two terms work?

HD increases on-screen detail compared with SD, while HDR widens the range of brightness and color those details can show compared with non-HDR displays. High definition relates to the number of pixels that make up the television display, while HDR relates to the range of brightness and color each of those pixels can reproduce.

The function of HD is to increase resolution, while the function of HDR is to expand brightness and color. Despite their similar names, these are two entirely different functions.

Importance

Another important distinction to make between HD and HDR is that of importance. Which one actually matters more, the former or the latter?

In practical terms, HD takes precedence over HDR. This is because HD determines the number of pixels, while HDR affects how those pixels look.

At the end of the day, it’s more essential to have a greater number of pixels than to have more colorful-looking pixels. If you had an SD-quality television with HDR pixels, you’d still be looking at a standard definition television that failed to look as high quality as an HD TV without HDR pixels.
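To put the pixel argument in numbers, the short Python sketch below compares total pixel counts and reduced aspect ratios for a few common resolutions. The resolution list is an illustrative assumption: SD is modeled as 640x480 square pixels (a 4:3 approximation; broadcast and DVD SD actually store 720x480 or 720x576 non-square pixels), and the helper names `pixel_count` and `aspect_ratio` are hypothetical.

```python
from math import gcd

# Illustrative resolutions (width, height). SD is approximated as 640x480
# square pixels; real SD video stores 720x480 or 720x576 non-square pixels.
RESOLUTIONS = {
    "SD (480p)": (640, 480),
    "HD (720p)": (1280, 720),
    "Full HD (1080p)": (1920, 1080),
    "4K UHD (2160p)": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in one frame."""
    return width * height


def aspect_ratio(width: int, height: int) -> str:
    """Reduce width:height to lowest terms, e.g. 1920x1080 -> '16:9'."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"


if __name__ == "__main__":
    sd = pixel_count(*RESOLUTIONS["SD (480p)"])
    for name, (w, h) in RESOLUTIONS.items():
        px = pixel_count(w, h)
        # Pixel count, reduced aspect ratio, and how many times more pixels than SD
        print(f"{name:<16} {w}x{h:<5} {px:>10,} px  {aspect_ratio(w, h):>5}  {px / sd:.2f}x SD")
```

Under these assumptions, a 1080p frame carries 6.75 times as many pixels as the 640x480 SD frame, which is the gap that HDR alone cannot close on an SD set.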

A Side-by-Side Comparison: HD vs. HDR

| | HD | HDR |
|---|---|---|
| First Standardized | 1988 | 2014 |
| Meaning | High definition | High dynamic range |
| Uses | Television, film, home video, streaming, gaming | Television, streaming, home video, gaming |
| Formats | 720p, 1080p, 2K | HDR10, HDR10+, Dolby Vision, HLG |
| Display | 16:9 aspect ratio | Peak brightness in nits (1,000+ is a common target) |
| Predecessor | Standard Definition (SD) | Standard Dynamic Range (SDR) |

5 Must-Know Facts About HD and HDR

- Even though it seems like everyone just got on the same page about HD, display technology is already looking toward the future with 8K televisions.

- There is no single, fixed definition of the phrase “high definition,” which is why it could be applied as far back as the 1930s to anything sharper than the television of the day.

- In HDR photography and videography, cameras capture several images at different exposures and combine them into one, preserving detail in both highlights and shadows for a more vibrant image.

- HD predates HDR by almost 30 years.

- HD is described in pixels and aspect ratios, while HDR performance is usually described by peak brightness in nits.
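The HDR photography fact above describes exposure bracketing. As a rough illustration only, the Python sketch below merges aligned bracketed frames into one relative-radiance image using a confidence-weighted average, a simplified linear-response version of the approach popularized by Debevec and Malik (1997). It assumes pixel values normalized to the range 0 to 1, known exposure times, and a linear sensor response; real cameras and HDR apps also align frames, calibrate the response curve, and tone-map the result for display. The function names are hypothetical.

```python
from typing import Sequence


def weight(z: float) -> float:
    """Hat-shaped confidence weight: mid-tones are trusted most, values near
    pure black (0.0) or clipped white (1.0) least. A tiny floor keeps the
    denominator non-zero even if every exposure of a pixel is clipped."""
    return max(min(z, 1.0 - z), 1e-6)


def merge_exposures(frames: Sequence[Sequence[float]],
                    exposure_times: Sequence[float]) -> list[float]:
    """Merge bracketed frames into one relative-radiance image.

    Assumes each frame is a flat list of pixel values in [0, 1], the frames
    are already aligned, and the sensor is linear (value ~ radiance * time).
    """
    if not frames or len(frames) != len(exposure_times):
        raise ValueError("need one exposure time per frame")
    merged = []
    for i in range(len(frames[0])):
        num = den = 0.0
        for frame, t in zip(frames, exposure_times):
            z = frame[i]
            w = weight(z)
            num += w * (z / t)  # this frame's estimate of scene radiance
            den += w
        merged.append(num / den)
    return merged


if __name__ == "__main__":
    # Hypothetical 3-pixel scene shot with a short (1 unit) and long (4 unit) exposure
    short = [0.05, 0.2, 0.5]
    long_ = [0.2, 0.8, 1.0]  # brightest pixel is clipped in the long exposure
    print(merge_exposures([short, long_], [1.0, 4.0]))
```

The clipped pixel in the long exposure receives almost no weight, so the short exposure supplies the highlight detail, while both frames contribute to the darker pixels.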

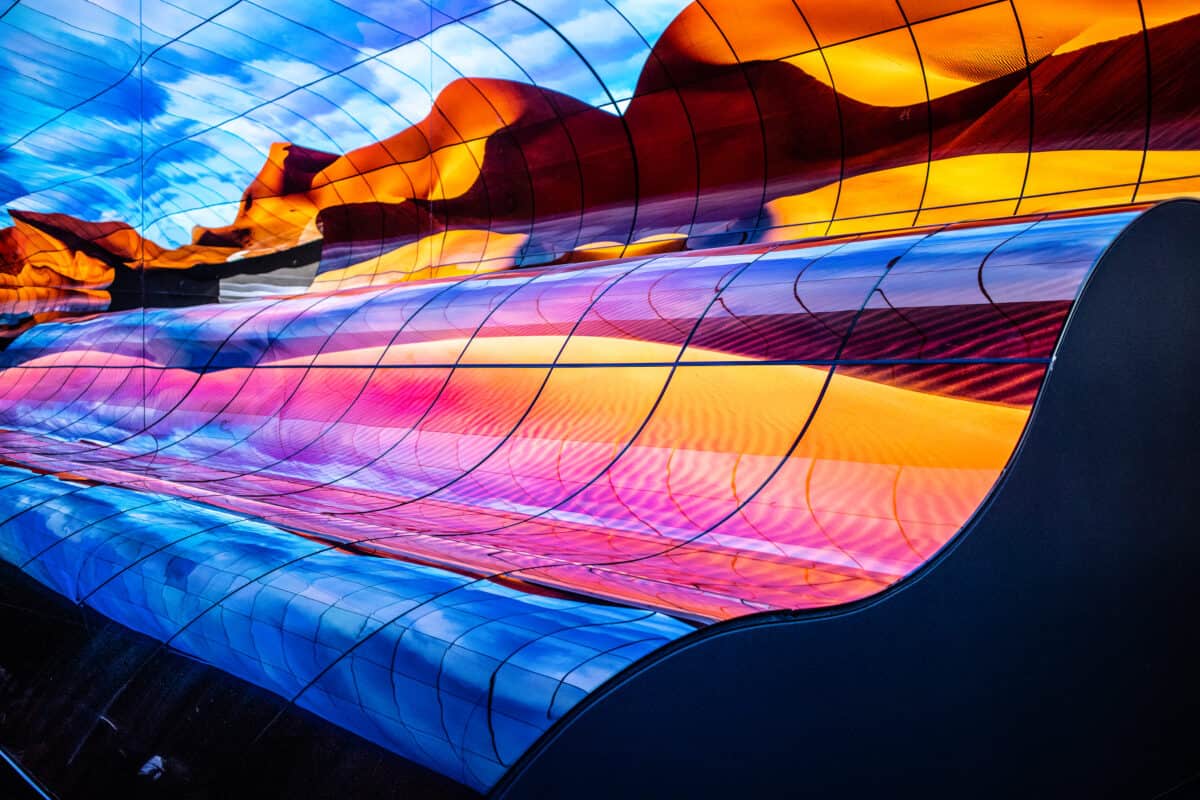

©Grzegorz Czapski/Shutterstock.com

The History of HD

Although the term was first coined in 1936 during experiments with analog television, today’s definition of HD (high definition, or HDTV) refers exclusively to the digital video display technology that succeeded SDTV. HD technology was first developed in the late 1980s and early 1990s, but marketable HD products weren’t truly embraced until the late 1990s.

Much of the delay had to do with the need for compressed video, which wasn’t perfected until 1988 and wasn’t widely adopted until nearly a decade later. Around that time, Digital Video Broadcasting (DVB) defined HD as any video resolution from 720p to 1080p.

HD might not be the format of the future (that honor belongs to 4K UHD, or 2160p ultra-high definition), but it’s undoubtedly the format of the present. Nearly every computer display sold today is HD, and the same goes for the majority of televisions.

Digital cameras are another example, with nearly all models on the market meeting the HD standard. It’s what consumers expect today; anything less would fall short of current television, streaming, gaming, and home video standards.

Despite being bested by UHDTV’s sheer quality, HDTV remains the industry’s standard for several media types, including Blu-rays, television broadcasts, and even some streaming services. It all has to do with accessibility and price—HD is still far cheaper and far more readily available than UHD, despite the latter’s superior quality. Given how long it took the world to get on the HD bandwagon, it’s not surprising to see that HD has remained the most popular digital video format long after the creation and release of UHD.


HDR Explained

High-dynamic-range (HDR) is a name you’ve likely seen pop up before. Whether it be your television, your streaming service of choice, or the latest video game, HDR is important, but somewhat confusing in comparison to HD.

While its name might suggest that HDR is a successor to HD, HDR is not a digital resolution but rather a display standard. If this terminology sounds confusing, think of it this way: HD refers to the number of pixels in a display, while HDR refers to the range of brightness and color those pixels can reproduce.

In short, HDR alters the way a video signal represents the luminance and colors of the image being displayed. An HDR signal enables brighter, more colorful, and more detailed highlights, along with a more diverse range of vibrant colors and deeper, more intense shadows.

In other words, HDR is a property of the signal rather than of the panel. The HDR format itself doesn’t add brightness, contrast, or color to a screen; it tells a capable display how to use the range it has, which can make the image look higher in quality than the display’s hardware alone would suggest.

Because each format handles the HDR signal in its own way, two competing HDR formats won’t look exactly the same, even when playing the same content, and they don’t offer identical capabilities. These formats include HDR10, HDR10+, Dolby Vision, HLG, and several others. Their main common ground is a bit depth of at least 10 bits and, for the formats built on the PQ curve (HDR10, HDR10+, and Dolby Vision), a theoretical peak luminance of 10,000 nits. Beyond this, the competing HDR formats are free to tweak and adjust their capabilities in whatever way they please.
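The 10,000-nit ceiling comes from the Perceptual Quantizer (PQ) transfer function standardized as SMPTE ST 2084, which HDR10, HDR10+, and Dolby Vision use to map signal values to absolute brightness (HLG instead uses a relative curve, not shown here). The Python sketch below implements the PQ EOTF and its inverse with the constants from that standard. The helper `code_to_nits` is hypothetical and assumes full-range quantization; HDR10 video usually uses narrow range (64 to 940 for 10-bit), which would need an extra rescaling step.

```python
# SMPTE ST 2084 (PQ) constants, written as the exact rationals from the standard
M1 = 2610 / 16384         # 0.1593017578125
M2 = 2523 / 4096 * 128    # 78.84375
C1 = 3424 / 4096          # 0.8359375
C2 = 2413 / 4096 * 32     # 18.8515625
C3 = 2392 / 4096 * 32     # 18.6875
PEAK_NITS = 10_000.0      # absolute ceiling of the PQ curve


def pq_to_nits(signal: float) -> float:
    """PQ EOTF: normalized signal in [0, 1] -> absolute luminance in nits."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    y = (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)
    return PEAK_NITS * y


def nits_to_pq(nits: float) -> float:
    """Inverse EOTF: luminance in nits -> normalized PQ signal in [0, 1]."""
    y = min(max(nits / PEAK_NITS, 0.0), 1.0) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def code_to_nits(code: int, bit_depth: int = 10) -> float:
    """Hypothetical helper: full-range integer code value -> nits.
    Assumes codes span 0..2**bit_depth - 1 (not HDR10's usual narrow range)."""
    max_code = (1 << bit_depth) - 1
    return pq_to_nits(code / max_code)


if __name__ == "__main__":
    for nits in (100, 1_000, 4_000, 10_000):
        print(f"{nits:>6} nits -> PQ signal {nits_to_pq(nits):.3f}")
```

The curve spends its code values unevenly: about half of the signal range covers 0 to 100 nits, where the eye is most sensitive to small steps, and 1,000 nits already sits near 0.75 of the range. That is one reason HDR needs at least 10 bits, since spreading the same curve over only 256 eight-bit levels would make banding far more visible.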

Most HDR TVs are also 4K, but some HD (1080p) TVs support HDR as well.


Pros and Cons of HD and HDR

| Pros of HD | Cons of HD |
|---|---|
| Far superior to SD quality | Falls short of 4K UHD quality |
| HD visuals are further improved by HDR | More expensive than SD quality |
| Often much cheaper than UHD displays | Not compatible with some of your older tech |
| HD uses less power than UHD | HD files take up more space than SD |

| Pros of HDR | Cons of HDR |
|---|---|
| Markedly improves the look of an HD or UHD display | Not all HD televisions include HDR |
| Typically comes built into 4K UHD discs and the latest 4K TVs and gaming consoles | Often exclusive to 4K UHD, to the frustration of HD TV owners |
| With no single HDR standard, there are many options available, from HDR10 to Dolby Vision and several others in between | HDR-equipped tech costs more |
| Becoming more affordable lately | Makes SD and DVD technology seem obsolete |

HD vs. HDR: Which is Better?

So, now that the differences between HD and HDR have been made clear, which is truly superior?

Ultimately, the question isn’t so black and white. We know HD and HDR are both improvements over the SD standards of the past, and both are becoming more affordable. We also know they are not mutually exclusive: you can have an HD TV without HDR, but you cannot have HDR without also having an HD display. For this reason, it only seems fair to say HD is better than HDR.

It’s about more than just the simple fact that HD can exist without HDR. It also has to do with the many minor issues with HDR that still need to be ironed out. HDR can occasionally make human skin appear sickly or off-colored on-screen. It can also do the same with other hard-to-replicate colors, such as sunsets or other natural phenomena. The same goes for fast-moving images like car chase scenes or sporting events, where HDR struggles to keep up.

In the end, HD isn’t responsible for these problems, because resolution only determines how much detail appears on screen; it has nothing to do with the altered color gamut of HDR.
