3 Facts About Microprocessors

- Microprocessors were initially called “Microcomputer on a chip” until 1972.

- You need at least two (2) months to create a microprocessor.

- Microprocessors are made from quartz, metals, silicon, and other chemicals.

Microprocessor History

A microprocessor is a computer processor that packs the logic, arithmetic, and control circuitry of a computer’s CPU onto a single integrated circuit. In essence, it is a multipurpose, register-based, clock-driven digital integrated circuit that accepts binary data as input, processes it according to instructions stored in memory, and provides the results, also in binary form, as output.

Inarguably, the introduction of low-cost computer-based integrated circuits has positively transformed modern society. Microprocessors, since their advent, have been helpful for multimedia display, computation, internet communication, text editing, industrial process controls, etc.

Quick Facts

- Created: January 1971

- Creator (person): Federico Faggin, Stanley Mazor, Marcian (Ted) Hoff, and Masatoshi Shima

- Original Use: Computational tasks

- Cost: $60

When exactly was the microprocessor invented? Answering this question leads us into the same story as the inventions of the integrated circuit, the transistor, and many other devices reviewed on this site. Several people had the idea almost simultaneously, but only one group got all the glory: the engineer Ted Hoff and his co-inventors Masatoshi Shima, Stanley Mazor, and Federico Faggin at Intel Corp., based in Santa Clara, California.

In the 1950s and 1960s, CPUs were built from many chips or from a few LSI (large-scale integration) chips. In the late 1960s, many articles discussed the possibility of a computer on a chip, but all concluded that integrated circuit technology was not yet ready. Ted Hoff was probably the first to recognize that Intel’s new silicon-gate MOS technology might make a single-chip CPU possible if a sufficiently simple architecture could be developed.

In 1990, another U.S. engineer and inventor, Gilbert Hyatt of Los Angeles, announced after a 20-year battle with the patent office that he had finally received a patent for a single-chip microprocessor that he says he invented in 1968, at least a year before Intel started (see U.S. patent №4942516). Hyatt asserted that he had put together the requisite technology a year earlier at his short-lived company, Micro Computer Inc., whose major investors included Intel’s founders, Robert Noyce and Gordon Moore. Micro Computer developed a digital computer that controlled machine tools, then fell apart in 1971 after a dispute between Hyatt and his venture-capital partners over sharing his rights to that invention. Noyce and Moore went on to develop Intel into one of the world’s largest chip manufacturers. “This will set history straight,” proclaimed Hyatt. “And this will encourage inventors to stick to their inventions when they’re up against the big companies.” However, nothing came of Hyatt’s claims to priority or to licensing fees from computer manufacturers.

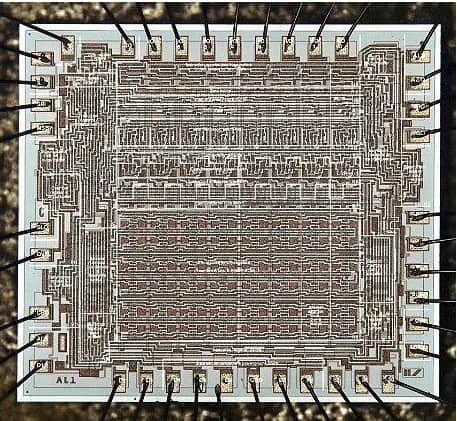

In 1969, Four-Phase Systems, a company established by several former Fairchild engineers led by Lee Boysel, designed the AL1, an 8-bit bit-slice chip containing eight registers and an ALU (see the photo below). At the time, it formed part of a nine-chip, 24-bit CPU with three AL1s. The AL1 was only called a microprocessor much later, in the 1990s, when, in response to litigation by Texas Instruments, a courtroom demonstration system was built in which a single AL1 worked together with an input-output device, RAM, and ROM. The AL1 shipped in the company’s data terminals as early as 1969.

The term “microprocessor” was first used when the Viatron System 21 small computer system was announced in 1968. Since the 1970s, the use of microprocessors has grown steadily and now spans many applications.

Microprocessor: How It Worked

The microprocessor’s working principle follows the sequence: fetching, decoding, and execution.

First, the instructions are stored sequentially in the computer’s memory. The microprocessor then fetches each stored instruction, decodes it, and executes it, repeating the cycle until it meets a STOP instruction. Finally, the results are sent in binary form through the output port.
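The fetch, decode, and execute sequence can be sketched in a few lines of Python. This is only an illustration: the four opcodes, the 8-bit instruction format (high nibble for the opcode, low nibble for the operand), and the single accumulator register are all invented for the example and do not correspond to any real chip.

```python
# Hypothetical toy machine: opcodes and encoding are invented for illustration.
LOAD, ADD, OUT, STOP = 0b0001, 0b0010, 0b0011, 0b1111


def run(memory):
    """Fetch, decode, and execute instructions until STOP; return the outputs."""
    pc = 0        # program counter: address of the next instruction
    acc = 0       # accumulator register
    outputs = []  # stands in for the output port
    while True:
        word = memory[pc]  # fetch the instruction the program counter points at
        pc += 1
        # decode: high nibble is the opcode, low nibble is the operand
        opcode, operand = word >> 4, word & 0x0F
        # execute
        if opcode == LOAD:
            acc = operand
        elif opcode == ADD:
            acc = (acc + operand) & 0xFF  # keep the accumulator to 8 bits
        elif opcode == OUT:
            outputs.append(format(acc, "08b"))  # results leave in binary form
        elif opcode == STOP:
            return outputs
        else:
            raise ValueError(f"unknown opcode {opcode:#06b}")


# Instructions stored sequentially in memory: load 2, add 3, output, stop.
program = [
    (LOAD << 4) | 2,
    (ADD << 4) | 3,
    (OUT << 4),
    (STOP << 4),
]
print(run(program))  # ['00000101']
```

The loop keeps running until it decodes the STOP opcode, and the only result that leaves the machine is the binary string written by OUT.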

To further understand how a microprocessor works, you must familiarize yourself with specific terms associated with the device. These basic terms include:

Instruction Set: This is the set of commands that the microprocessor understands. The instruction set serves as the interface between the computer’s software and hardware.

Clock Speed: This is the number of clock cycles a microprocessor completes in a second, expressed in hertz or its multiples. Clock speed is also called clock rate.

IPC: Instructions Per Cycle (IPC) measures the average number of instructions a computer’s central processing unit can execute in a single clock cycle.

Bandwidth: This is the number of bits the microprocessor can process in a single instruction.

Bus: A bus is a set of conductors that carries data, address, or control information between the elements of a microprocessor system. There are three basic types: the address bus, the data bus, and the control bus.
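Clock speed and IPC are easiest to understand together, because their product gives a rough estimate of how many instructions a processor completes each second. The sketch below assumes a constant average IPC, which real processors do not have, and the figures passed in are hypothetical rather than measurements of any particular chip.

```python
def instructions_per_second(clock_hz, ipc):
    """Rough throughput estimate: clock cycles per second times instructions per cycle."""
    return clock_hz * ipc


def average_ipc(instr_per_second, clock_hz):
    """Invert the relation to recover the average IPC from a measured throughput."""
    return instr_per_second / clock_hz


# Hypothetical processor: 1 MHz clock, one instruction every two cycles on average.
print(instructions_per_second(1_000_000, 0.5))  # 500000.0
print(average_ipc(500_000, 1_000_000))          # 0.5
```

Two processors with the same clock speed can therefore differ widely in throughput if one of them needs more cycles per instruction.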

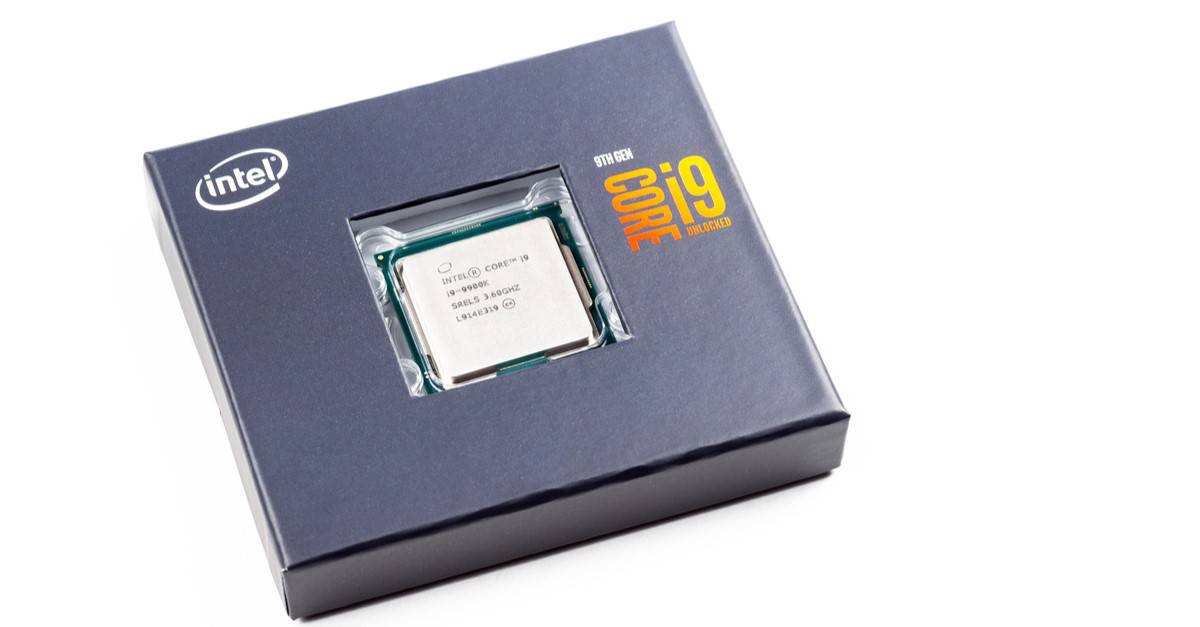

Microprocessor: Historical Significance

The first working CPU was delivered to Busicom in February 1971. It was called a “Microcomputer on a chip” (the word microprocessor wasn’t used until 1972). The first known advertisement for the 4004 appeared in Electronic News in November 1971. The first commercial product to use a microprocessor was the Busicom 141-PF calculator. After Intel delivered the four chips, Busicom eventually sold some 100,000 calculators. Intel shrewdly bought back the design and marketing rights to the 4004 from Busicom for $60,000, then followed a marketing plan that encouraged the development of applications for the chip, leading to its widespread use within months.

The first 8-bit microprocessor was again manufactured by Intel, this time under contract to another company, Computer Terminals Corporation (CTC) of San Antonio, TX, later called Datapoint. CTC wanted a chip for a terminal it was designing, but rejected the result because it required many support chips. Intel marketed the chip as the 8008 in April 1972.

In April 1974, Intel announced its successor, the world-famous 8080, which opened up the microprocessor component marketplace. With the ability to execute 290,000 instructions per second and address 64K bytes of memory, the 8080 was the first microprocessor with the speed, power, and efficiency to become a vital tool for designers.

Development labs set up by Hamilton/Avnet, Intel’s first microprocessor distributor, showcased the 8080 and provided a broad customer base which contributed to it becoming the industry standard. A critical factor in the 8080’s success was its role in the introduction in January 1975 of the MITS Altair 8800, the first successful personal computer. It used the powerful 8080 microprocessor and established that personal computers must be easy to expand. With its increased sophistication, expansibility, and incredibly low price of $395, the Altair 8800 proved the viability of home computers.

Intel was the first company to make microprocessors, but not the only one (see the Timeline of Intel’s Microprocessors). The competing Motorola 6800 was released in August 1974, the similar MOS Technology 6502 in 1975, and the Zilog Z80 in 1976.

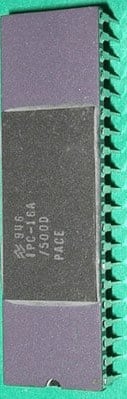

The first multi-chip 16-bit microprocessor was the National Semiconductor IMP-16, introduced in early 1973. An 8-bit version of the chipset was introduced in 1974 as the IMP-8. During the same year, National introduced the first 16-bit single-chip microprocessor, the PACE, followed by an NMOS version, the INS8900.

Another early single-chip 16-bit microprocessor was TI’s TMS 9900, introduced in 1976, which was also compatible with TI’s TI-990 line of minicomputers. Intel produced its first 16-bit processor, the 8086, in 1978. It was source-compatible with the 8080 and the 8085 (an 8080 derivative). This chip has probably had more effect on the present-day computer market than any other, although whether this is justified is debatable: compatibility with the four-year-old 8080 meant it had to use a most unusual scheme of overlapping segment registers to access a full 1 megabyte of memory.
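The overlapping segments of the 8086 are easier to see with a worked calculation. The sketch below uses the well-known real-mode rule, in which a 16-bit segment value is shifted left by four bits and added to a 16-bit offset to form a 20-bit physical address. The function name and the sample values are chosen for illustration only.

```python
def physical_address(segment, offset):
    """Combine a 16-bit segment and a 16-bit offset into a 20-bit physical address."""
    # Shifting by 4 bits multiplies the segment by 16; the mask models the
    # 20 address lines of the 8086, so results past 1 MB wrap around.
    return ((segment << 4) + offset) & 0xFFFFF


# Two different segment:offset pairs that name the same byte of memory.
print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
print(hex(physical_address(0x1235, 0x0000)))  # 0x12350

# The highest pair wraps around the 1 MB boundary.
print(hex(physical_address(0xFFFF, 0xFFFF)))  # 0xffef
```

Because a new segment starts every 16 bytes while each segment spans 64K, most physical addresses can be reached through many different segment and offset combinations.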

The most significant 32-bit design is the Motorola MC68000, introduced in 1979. The 68K, as it was widely known, had 32-bit registers but used 16-bit internal data paths and a 16-bit external data bus to reduce pin count, and it supported only 24-bit addresses. Motorola generally described it as a 16-bit processor, though it clearly has a 32-bit architecture. The combination of high performance, ample memory space (16 megabytes, or 2^24 bytes), and relatively low cost made it the most popular CPU design of its class. The Apple Lisa and Macintosh designs used the 68000, as did a host of other designs in the mid-1980s, including the Atari ST and Commodore Amiga.
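The memory sizes quoted for these chips follow directly from the width of their addresses: n address bits can select 2^n distinct bytes. As a quick check, the sketch below recomputes the figures mentioned in this article. The 24-bit width of the 68000 is stated above, while the 16-bit and 20-bit widths for the 8080 and 8086 are inferred here from their 64K-byte and 1-megabyte limits.

```python
def addressable_bytes(address_bits):
    """Number of distinct byte addresses reachable with the given address width."""
    return 2 ** address_bits


for name, bits in [("8080", 16), ("8086", 20), ("68000", 24)]:
    size = addressable_bytes(bits)
    print(f"{name}: {bits}-bit addresses -> {size:,} bytes ({size // 1024:,} KiB)")
# 8080: 16-bit addresses -> 65,536 bytes (64 KiB)
# 8086: 20-bit addresses -> 1,048,576 bytes (1,024 KiB)
# 68000: 24-bit addresses -> 16,777,216 bytes (16,384 KiB)
```

Each extra address bit doubles the reachable memory, which is why going from 16 to 24 bits raises the limit from 64K bytes to 16 megabytes.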

The world’s first single-chip fully-32-bit microprocessor, featuring 32-bit data paths, 32-bit buses, and 32-bit addresses, was the AT&T Bell Labs BELLMAC-32A, with first samples in 1980 and general production in 1982.
