The modern CPU, or Central Processing Unit, is often described as the “brain” of a computer. It processes the instructions in a computer program, executes functions, and performs calculations. The CPU is an essential component of modern technology; without it, computers and electronic devices would not exist as we know them today. Early CPUs were large, cumbersome, and very expensive, but technological advances over the years have led to smaller, more efficient CPUs that can process data at incredible speeds.

The Modern CPU: Where Did it Come From?

Even if you are unfamiliar with the idea of what a CPU is, you have probably benefited from the technology more times than you can imagine. The fact that you are reading this article is a good indicator you are taking advantage of a CPU as part of a computer, smartphone, tablet, or some other type of device that connects to the internet.

Computers are woven into our lives today, and the backbone of every computer is the CPU, or Central Processing Unit. This (now) tiny component has a few different definitions, but it can broadly be described as a collection of electronic circuitry that executes the series of instructions provided by a computer program. Like the automobile, the CPU has become one of the most important inventions in modern history. Here’s a look at the history of the modern CPU and how its explosion in use has brought the world together in ways once thought unimaginable.

Quick CPU Facts

| Fact | Detail |
|---|---|
| Year Introduced | 1971 |
| First Processor | Intel 4004 |
| Original Use | Cash registers, teller machines, calculators |
| Key Companies | Intel, Busicom, AMD, IBM, Motorola, Qualcomm, NVIDIA |
| Today’s Cost | $150 upwards |
| Notable Products | Computers, smartphones, tablets, cars, thermostats, televisions |

The 1970s

The history of the modern CPU begins with the famous Intel 4004, widely recognized as the world’s first commercially available microprocessor. Understandably, the modern CPU and Intel go hand in hand, as Intel is responsible for many of the milestones that followed.

Intel 4004

The Intel 4004 was a 4-bit processor developed as a joint project between Japan’s Busicom and America’s Intel. As the first CPU built on a single chip, the 4004 was made possible by major advances in silicon-gate technology, which helped increase performance fivefold over previous processors. It was first used in a Busicom calculator and later found its way into products like bank teller machines and cash registers. In 1974, the 4004 also appeared in one of the first microprocessor-controlled pinball games, making it one of the earliest CPUs used for gaming.

Intel 8008 and 8080

After the release of the Intel 4004, work quickly shifted to its successor, the Intel 8008. The 8008 doubled the 4004’s data width from 4 bits to 8 (hence the number), making it the world’s first 8-bit microprocessor, and it could address 16KB of memory. While the 8008 saw limited use in Japan and America, its successor, the Intel 8080, would do far more for the history of the modern CPU.

The Intel 8080 is remembered for its many consumer uses, such as cash registers and printers, but also for some of its military applications. The 8080 is considered the first CPU to be used in cruise missiles, and it also became a foundation for early personal computers such as the Altair 8800. Thanks to this widespread use, the 8080 is remembered as the microprocessor that first saw major integration into products around the world.

The 8080’s successor, the Intel 8086, would become the first x86 processor in Intel’s history, and IBM selected a close variant of it, the 8088, to power the original IBM Personal Computer in 1981.

MOS Technology 6502

Developed in the 1970s by the now-defunct MOS Technology, the 6502 is a microprocessor with a remarkable history, especially if you are a gamer. When it was introduced in 1975, the 6502 was by far the least expensive CPU on the market, and Intel’s prices were not even close: the 6502 sold for less than one-sixth the cost of the Intel 8080.

The MOS Technology 6502 will be best remembered for its impact on home computing and the video game industry. Popular systems, from home computers like the Apple II, Commodore 64, and BBC Micro to consoles like the Atari 2600, Atari Lynx, and even the Nintendo Entertainment System, used the 6502 either as designed or in a modified form. The chip was so successful that Western Design Center followed it in 1981 with the 65C02, a CMOS version that has been produced in the hundreds of millions and is still in use today.

Motorola 68000

Perhaps the last truly notable chip of the 1970s is the Motorola 68000, released in 1979. With 32-bit registers and a 16-bit data bus, the 68000 was very easy to program and quickly saw widespread adoption, including in personal computers like the early Apple Macintosh, the Amiga, and the Atari ST. Even the Sega Genesis (Mega Drive) video game console launched with the Motorola 68000.

The 1980s

Intel 80386

One of the most important processors of the 1980s was the Intel 80386, a 32-bit microprocessor that could address up to 4GB of memory. Its larger address space and improved memory management mark a turning point in CPU history, when multitasking became a practical reality. Think of how we can keep multiple windows open at the same time: a browser, a spreadsheet, a notepad, and more. This processor laid the groundwork for something we take for granted every day.

Unfortunately, DOS, the dominant operating system of the day, could not take advantage of multitasking, but today both Windows and macOS are built around it.

ARM1

Also in 1985, we move away from Intel to what would become the second-biggest name in the microprocessor and CPU space. That year, ARM entered the processor space with the ARM1, which was developed by Acorn Computers and first used as a second processor for the BBC Micro. Apple later saw that Acorn was on the right path and partnered with the company to build a new processor for its ill-fated personal digital assistant, the Apple Newton.

Motorola 68020

Motorola also looked to remain competitive in the processor space and released its own 32-bit processor, the 68020. Designed to be twice as fast as the original Motorola 68000, it was introduced in 1984 to a great deal of hype, including a dedicated New York Times article about its launch. The 68020 went on to see wide use in machines such as the Apple Macintosh II, Amiga 1200, and Hewlett-Packard 8711, and it was even used in the flight control system of the Eurofighter Typhoon aircraft.

Intel, Motorola, and Acorn would continue to release incremental processor improvements over the remainder of the 1980s, but it wasn’t until the 1990s that the CPU and microprocessor industry would take its next big leap forward.

The 1990s

The decades preceding the 1990s were filled with competition between IBM, Compaq, Apple, Commodore, and other computer manufacturers, all looking to gain a foothold in the market. However, it wasn’t until the 1990s that the computer landscape truly took shape and, in many ways, laid the groundwork for the CPU industry we know today.

Intel i386

By the early 1990s, Intel was marketing the 80386 as the i386, and this 32-bit processor was available to any computer manufacturer that had a partnership with Intel, helping the computer industry grow significantly during this period. Its follow-up, the Intel i486, was the last of Intel’s numbered x86 processors before the Pentium brand and brought significant improvements in data transfer speed and onboard memory cache.

Intel Pentium

The release of the Intel Pentium processor on March 22, 1993, is one of the most pivotal moments in personal computing history. Capable of executing up to two instructions per clock cycle, the Pentium line dramatically changed how multimedia content was handled and made personal computers far more capable as entertainment machines. The Pentium family was large, ran on systems using Windows 3.1, DOS, Unix, and OS/2, and came in a range of clock speeds, each priced differently for computer makers.

The Pentium Pro followed in 1995. Rather than being a pure RISC chip, it translated x86 instructions into simpler RISC-like micro-operations internally, and at 200MHz it was the fastest CPU available in a home computer up to that point. Its speed was more than the average user needed, and Intel aimed it largely at business customers, but that didn’t matter: the Pentium Pro further solidified Intel’s position as the premier CPU manufacturer.

Intel Pentium II and III

The Pentium II arrived in 1997 and eventually reached 450MHz, more than double the Pentium Pro’s 200MHz, while helping computers start to shrink from their already oversized hardware. However, it was the Pentium III, launched in 1999, that changed the game for Intel when it eventually reached the previously unheard-of clock speed of 1GHz.

Intel Celeron

Even as Intel doubled down on the Pentium as its flagship processor, it introduced the Celeron to counter AMD’s lower prices. The Celeron strips out some of the Pentium’s best features but still performs admirably for everyday tasks. Today, it remains Intel’s less expensive choice for lower-priced machines that do not need the horsepower of its Core i-series CPUs.

AMD Am386

While Intel’s dominance grew during the 1990s, its competitors were not standing still. AMD released the Am386 processor in 1991, which offered performance equal to Intel’s processors of the time at a significantly lower price. That combination of comparable performance and lower cost is what set AMD apart from Intel. For the rest of the 1990s, Intel largely created new CPUs and AMD reverse-engineered them into less expensive alternatives: very much a game of cat and mouse.

AMD Athlon

AMD had spent the better part of the 1990s chasing Intel’s success, but the release of the AMD Athlon (K7) in June 1999 marked a dramatic turn of events. Technical issues left some of the best-performing Pentium III models underperforming, while the Athlon was a huge success. AMD reached the 1GHz mark with the Athlon in March 2000, setting off the AMD vs. Intel race that is alive and well today.

Computers Going Mainstream

It’s important to note that the late 1990s were when CPUs became powerful enough to bring personal computers into nearly every home. Companies like Microsoft were designing friendlier interfaces, and costs were falling far enough that owning a computer, or even more than one, no longer felt like a privilege reserved for the wealthy.

The 2000s

The early 2000s were marked by incremental updates to Intel’s Pentium and AMD’s renamed Athlon processors. Both companies pushed clock speeds higher, from 1GHz to 1.3GHz to 1.86GHz and beyond. This continued for the first part of the decade until July 2006, when Intel released its first major upgrade in years: the Core 2 lineup.

Intel Core 2 Duo

With the release of the Intel Core 2 lineup, the Pentium brand was demoted to a mid-range product rather than Intel’s flagship. Both the Core 2 Duo and Core 2 Quad substantially improved on the Pentium architecture and could outperform even the fastest Pentium CPU running at its highest clock speed.

Intel claimed that the Core 2 lineup was 20% faster than its best Pentium CPUs and was well suited not just to desktops but also to a huge range of laptops. Intel also pitched the Core 2 Duo as the best chip for converting music from CD to MP3, playing games, and delivering high-definition audio. With Core 2, Intel retook the CPU performance crown for the first time since the release of the AMD Athlon.

Intel Atom

Building on the success of the Core 2 Duo lineup, Intel looked to capitalize on this era with less powerful machines. With the Intel Atom, built on the Bonnell microarchitecture, Intel successfully brought its processors to the popular, albeit short-lived, era of netbooks and nettops. CPUs like the Atom N450 were hugely successful for a time, and although netbooks faded, the Atom opened the door to inexpensive processors that help power all kinds of devices.

AMD Phenom

Released in 2007, AMD’s Phenom was a 64-bit CPU that the company billed as the first true quad-core design. The Phenom gave Intel’s Core 2 processors genuine competition, and while Intel won the overall sales battle, AMD again proved it could challenge Intel’s dominance, albeit briefly. AMD, which had held nearly 25% of the CPU market in 2006, followed up with the Phenom II, but the company began to decline once it could no longer quickly surpass Intel’s CPU performance.

The rest of the decade was marked by small improvements to both Intel and AMD CPUs. Each company used this time to build integrated graphics into its CPUs, an approach still widely used today. Sales figures show the tremendous rise in computing over this period: about 1 billion CPUs were sold worldwide in 1997, and that number had jumped to 10 billion by 2008.

The 2010s and Beyond

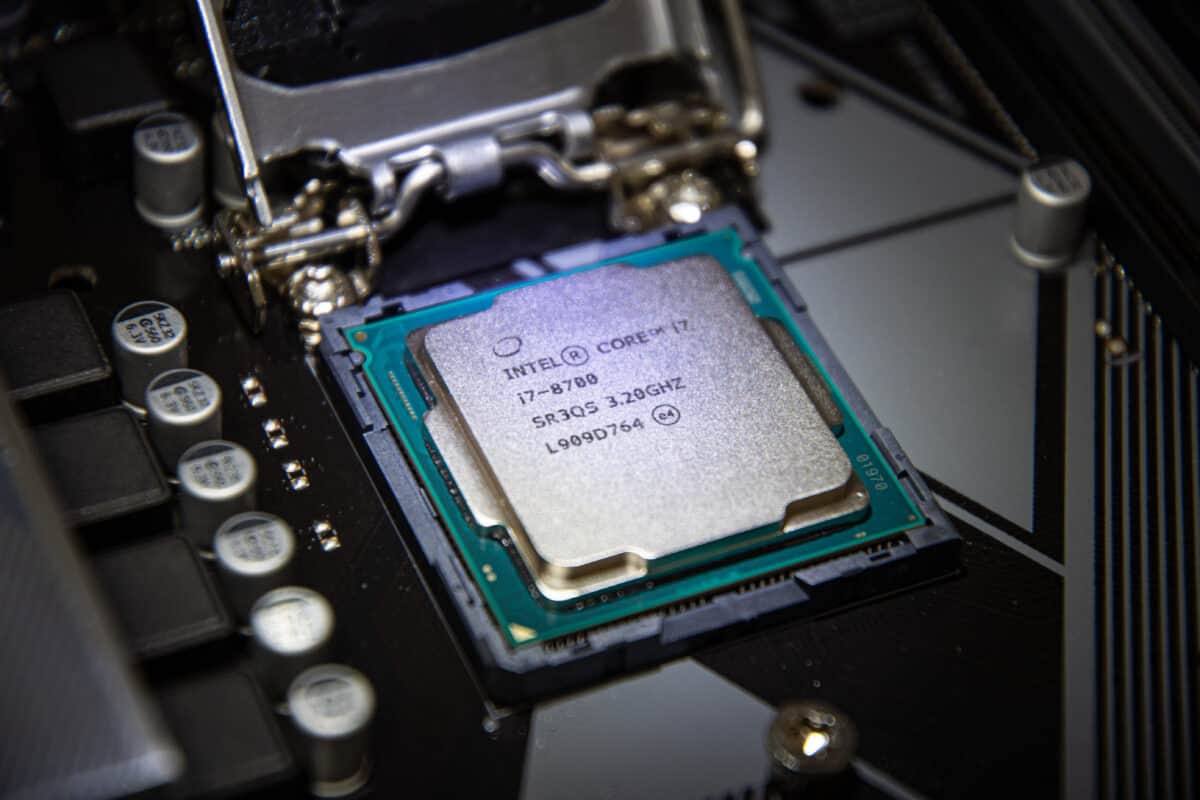

Intel Core i-Series

Intel’s Core i-series, first released in late 2008, is still around today. The Intel Core i3, i5, and i7 are likely the most widely used CPU series in the world. The Core i3 is Intel’s low-end processor and the most notable successor to the Core 2 Duo series. The i5 and i7 are regarded as faster processors for multitasking, multimedia, and video and photo editing, and that’s especially true of the i7, whose Hyper-Threading lets each core run two threads at once for better multitasking performance.

Over the last decade, Intel has released numerous generations of its Core i-series, including Sandy Bridge, Ivy Bridge, Haswell, Broadwell, Skylake, and more. Some generations brought incremental performance gains, while others delivered dramatic ones. Along the way, the processors have become small and efficient enough to be used in computers of all shapes and sizes, from small laptops to desktop workstations capable of advanced computing tasks.

AMD Zen

After treading water between 2006 and 2017, AMD found its footing again with the release of its Zen-based CPUs in 2017. The Zen series quickly put AMD back on the path to success, and it often trades places with Intel for the best CPU performance at various price points. Like Intel, AMD releases regular updates to its Zen CPUs, each bringing significant improvements and continuing to show that you do not have to pay Intel prices for similar performance.

Compared with the 1980s and 1990s, the volume of processor releases has grown significantly. CPU releases back then were not nearly as hyped as they are today, when power users eagerly look for the next great chip to advance what computers can do with gaming, 3D, VR, and other advanced technologies like AI.

Final Thoughts

The history of the modern CPU isn’t one to take lightly. This list touches on only the most notable releases; dozens, if not hundreds, of incremental CPU releases over the last 50 years helped get us where we are today. Tens of thousands of the world’s most brilliant minds have worked tirelessly to bring computers into homes around the world while also powering rocket ships, smartphones, video game systems, and much more.

As the saying goes, the sky’s the limit, and that’s likely true of microprocessors today as CPU manufacturers continue to push the limits of what technology can do. Future CPU developments will not only help Intel’s and AMD’s bottom lines but also continue to show what is possible when human ingenuity and a desire to build something great come together.

The image featured at the top of this post is ©Alexander_Safonov/Shutterstock.com.