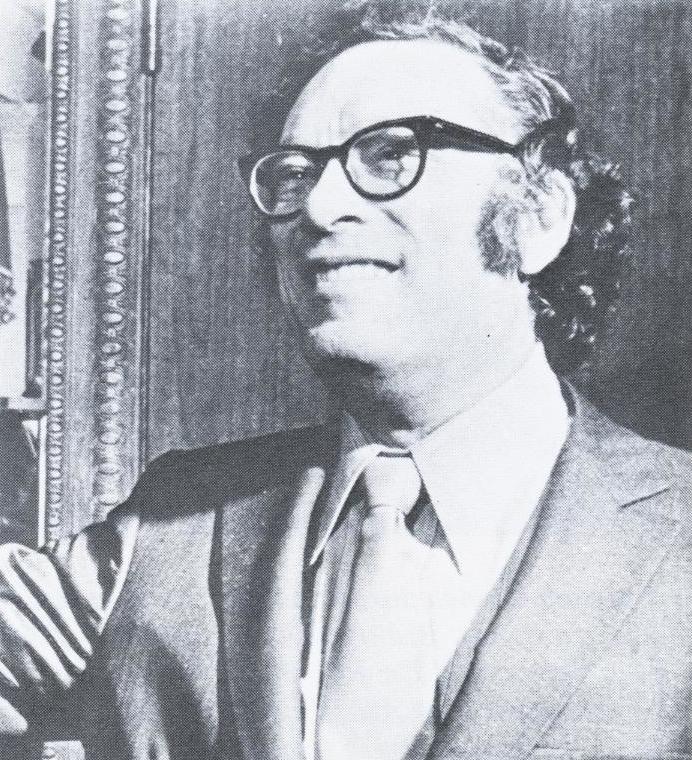

Isaac Asimov: The inventor of Asimov’s Laws of Robotics

What are Asimov’s Laws of Robotics: Complete Explanation

Isaac Asimov introduced the Three Laws of Robotics in his science fiction short story ‘Runaround’, published in 1942. In later stories, he imagined robots being given responsibility for governing whole human civilizations and planets.

If you think Asimov’s Laws of Robotics are scientific laws, you are mistaken. They are simple instructions built into all the robots in his stories to keep them from behaving in any way that could be dangerous.

Because robots are programmed to act in a certain way, or according to the output of an algorithm, they might end up harming human civilization or the planet. They have no empathy or emotions with which to understand a situation their algorithm does not cover. To keep the planet and its people safe, Asimov gave his robots the three laws, which became hugely popular.

The Three Laws did not appear all at once, but over a period of time. Asimov wrote his first two robot stories, “Robbie” and “Reason”, with no explicit mention of the Laws. He assumed, however, that robots would have certain inherent safeguards. “Liar!”, his third robot story, makes the first mention of the First Law but not the other two. All three laws finally appeared together in “Runaround”.

In his short story “Evidence”, published in the September 1946 issue of Astounding Science Fiction, Asimov lets his recurring character Dr. Susan Calvin expound a moral basis behind the Three Laws. Calvin pointed out that human beings are typically expected to refrain from harming other humans (except in times of extreme duress like war, or to save a greater number) and this is equivalent to a robot’s First Law.

Likewise, society expects people to obey instructions from recognized authorities such as doctors, teachers and so forth, which corresponds to the Second Law of Robotics. Lastly, people are typically expected to avoid harming themselves, which corresponds to the Third Law.

Asimov’s Laws quickly grew entwined with science fiction literature, but the author wrote that he shouldn’t receive credit for their creation because the Laws are “obvious from the start, and everyone is aware of them subliminally. The Laws just never happened to be put into brief sentences until I managed to do the job. The Laws apply, as a matter of course, to every tool that human beings use.”

Moreover, Asimov believed that, ideally, humans would also follow the Laws:

I have my answer ready whenever someone asks me if I think that my Three Laws of Robotics will actually be used to govern the behavior of robots, once they become versatile and flexible enough to be able to choose among different courses of behavior.

My answer is, “Yes, the Three Laws are the only way in which rational human beings can deal with robots—or with anything else.”

—But when I say that, I always remember (sadly) that human beings are not always rational.

Asimov’s Laws of Robotics: An Exact Definition

Asimov’s Three Laws of Robotics, quoted as being from the “Handbook of Robotics, 56th Edition, 2058 A.D.”, are:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

In his later stories, where robots had taken responsibility for the government of whole planets and human civilizations (the concept originally appeared in the 1950 short story “The Evitable Conflict”), Asimov also added a fourth law, the Zeroth Law, to precede the others:

A robot may not harm humanity, or, by inaction, allow humanity to come to harm.
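
The precedence among the laws (Zeroth before First, First before Second, Second before Third) can be illustrated with a small Python sketch. This is only a toy model under simplifying assumptions, not anything taken from Asimov's stories: the `Action` class, the law labels, and the `choose` function are hypothetical names invented here, and each candidate action is assumed to come pre-labelled with the laws it would break.

```python
from dataclasses import dataclass

# Priority order as stated in the text: the Zeroth Law precedes the other three.
LAW_ORDER = ("zeroth", "first", "second", "third")


@dataclass(frozen=True)
class Action:
    """A candidate action, labelled (hypothetically) with the laws it would break."""
    name: str
    violates: frozenset = frozenset()


def violation_key(action):
    # One boolean per law, highest priority first. Tuples compare left to
    # right and False sorts before True, so breaking a higher law always
    # outweighs breaking any number of lower ones.
    return tuple(law in action.violates for law in LAW_ORDER)


def choose(actions):
    """Return the action whose most serious violation is the least serious."""
    return min(actions, key=violation_key)


# An order that endangers only the robot: obeying breaks the Third Law,
# refusing breaks the Second, so the robot obeys.
risky_order = [
    Action("obey_order", frozenset({"third"})),
    Action("refuse_order", frozenset({"second"})),
]

# An order to hurt a person: obeying breaks the First Law, so the robot refuses.
harmful_order = [
    Action("obey_order", frozenset({"first"})),
    Action("refuse_order", frozenset({"second"})),
]

print(choose(risky_order).name)
print(choose(harmful_order).name)
```

Treating each law as simply broken or not broken is a deliberate simplification; it captures only the ordering of the laws, not the judgment a robot would need in order to decide whether an action counts as harm.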

Where did Asimov’s Laws of Robotics originate?

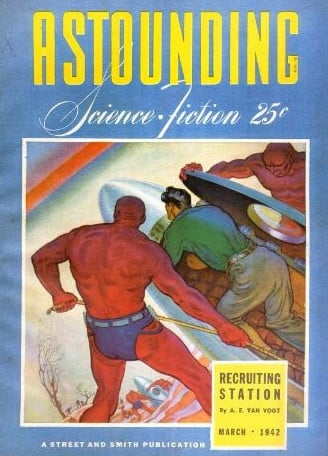

In October 1941 the young American writer Isaac Asimov wrote the science fiction short story “Runaround”, which was first published in the March 1942 issue of Astounding Science Fiction magazine. The story (it later appears in the collections I, Robot (1950), The Complete Robot (1982), and Robot Visions (1990)) featured the recurring characters Powell and Donovan, and introduced his Three Laws of Robotics.

The Three Laws form an organizing principle and unifying theme for Asimov’s robot-based fiction. They appear in his Robot series, in the stories linked to it, and in his Lucky Starr series of young adult fiction. He built the laws into almost all of the positronic robots in his fiction. They are intended as a safety feature, and the robots cannot bypass them.

Many of the robots in Asimov’s writing behave in unusual and counter-intuitive ways, often as an unintended consequence of how they apply the Three Laws to protect people in the situations they find themselves in.

What is the Application of Asimov’s Laws of Robotics?

Asimov’s Laws of Robotics were introduced as a safeguard for humans. Asimov envisioned a world where robots would act like servants and would require some programming rules to prevent them from causing harm.

In the real world, Asimov’s laws are still referred to as a template for guiding the development of robots. As technology has advanced, people have formed a somewhat different idea of what robots will look like and how people will interact with them. These advances have already produced a wide range of devices, from military drones and autonomous vacuum cleaners to entire factory production lines.

The image featured at the top of this post is ©Rochester Institute of Technology, Public domain, via Wikimedia Commons.